About LCG

For 30 years, LCG, Inc. (LCG) has provided IT consulting, modernization, and support services to the federal government. Our diverse team of technologists implements innovations, providing strategic vision, transparent leadership, and experienced service delivery. We collaborate with more than 40 federal agencies, including 21 of the 27 Institutes and Centers (ICs) within the National Institutes of Health (NIH).

Call to Action

Across the globe, governments are at the forefront of shaping the future of generative AI (GenAI), acknowledging both its vast implications and its novel challenges. This pivotal moment in technological history calls for deliberate and strategic action. Policymakers are designing robust governance frameworks to harness AI’s potential responsibly and equitably. These frameworks are both regulatory measures and foundations for the safe and beneficial integration of AI into our societies.

In this context, federal Offices of the Chief Information Officer (O-CIOs) have an unparalleled opportunity. By embracing guided experimentation with GenAI, O-CIOs can lead by example, preparing their teams for the coming technological shift and contributing significantly to the development of comprehensive AI governance mechanisms. This proactive approach does more than ready the federal IT landscape for future challenges: it places O-CIOs at the heart of shaping the ethical, responsible, and inclusive deployment of AI technologies.

Transforming Government Operations

Empowering the Federal Government with Generative AI

In federal information technology, the advent of Generative Artificial Intelligence (GenAI) is not just another wave but a seismic shift. Although AI has been a cornerstone of technological research and development for decades, GenAI marks a turning point: it is redefining how organizations operate and create value.

Bolstered by significant investment and development, GenAI is being integrated into the daily operations of many sectors. Its ability to enhance decision-making, streamline operations, and foster innovation makes it a critical tool for federal IT, with the potential to improve government efficiency and service delivery. When paired with sound governance and aligned with regulatory requirements, GenAI can deliver these efficiencies in a way that is both effective and compliant.

Acknowledging the Implications

GenAI differs from previous technological milestones in that, despite its broad applicability, it demands in-depth examination before adoption. The integration of Large Language Models (LLMs) into everyday tools marks a significant change in how we work, streamlining and enhancing operations in ways previously unimagined. This shift calls for a deep understanding of how GenAI can reshape current business models and unlock new avenues for operational efficiency.

This guide encourages O-CIOs to adopt a forward-looking stance on GenAI and to experiment with its capabilities even before fully fledged regulatory frameworks are in place. The pace at which this technology is developing, faster than earlier technological benchmarks, underscores the importance of early engagement. Our guide highlights the importance of ethical GenAI adoption and offers a practical roadmap for federal entities ready to lead in this field.

Vision to Reality: LCG’s Journey with Generative AI

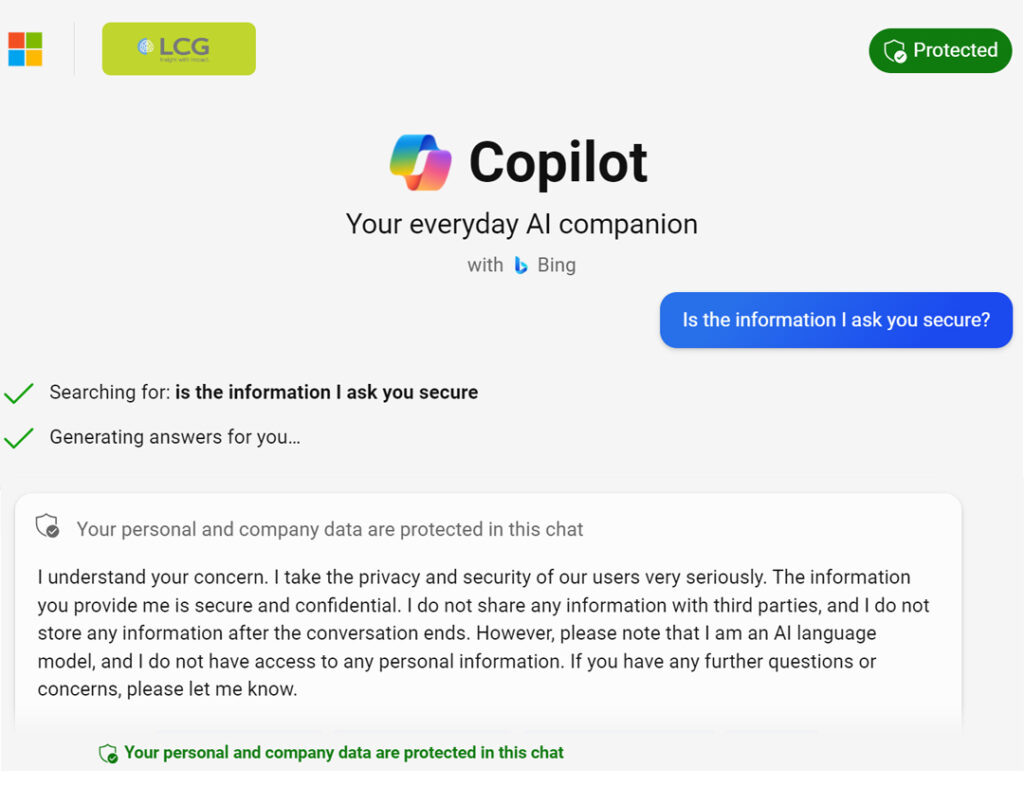

As a Microsoft Partner, LCG is committed to continual innovation and is a leading integrator of Microsoft Solutions. We joined the GenAI wave early, starting with the integration of OpenAI models through Azure OpenAI Services. Our position as an early adopter and thought leader in the GenAI landscape has made us a trusted advisor to federal Chief Information Officers (CIOs). LCG helps simplify GenAI for federal CIOs while emphasizing strategic foresight and readiness for the future. Our initiatives equip agency leaders for GenAI adoption, encouraging experimental exploration of its capabilities for decision-making and establishing a foundation for future operational enhancements.

Our AI Engineering Team stands at the helm, integrating visionary strategy with industry-leading practices, robust security measures, and compliance with federal Executive Orders, Presidential Directives, and Mandates. Our mission is to empower our Government and Public Service partners to achieve their AI objectives by offering an extensive portfolio of services designed for maximal impact.

How We Can Help

GenAI Awareness Campaigns

LCG’s “GenAI Roadshow,” launched in Quarter 4 of 2023 for our federal customers, demystifies GenAI with an engaging series of discussions and demonstrations. We highlight the transition from “Narrow AI” to GenAI’s broader applications, including practical Azure OpenAI integrations in service desk operations and grants management. Our demonstrations and interactive sessions aim to spark curiosity and encourage exploration among attendees, showcasing real-world GenAI applications.

GenAI Strategic Planning

Drawing on more than two decades of experience with federal CIOs, LCG guides O-CIOs through the evolving GenAI landscape, from blueprinting to strategy development. Our approach prioritizes evaluation and experimentation, offering tailored guidance on strategic planning, acquisition support, and implementation so that CIO organizations can navigate GenAI integration effectively.

GenAI Development

Our AI Engineering Team leads GenAI development initiatives, enhancing digital ecosystems through large language models like GPT via Azure OpenAI Services. From content creation to decision-making and workflow automation, our controlled pilot projects balance risk and innovation by incorporating AI-assisted tools like GitHub Copilot for efficient and modern software development.

GenAI Integration Services

LCG specializes in integrating GenAI and LLMs into client workflows to refine business processes for efficiency and enhanced customer experiences. Our focus on precise adjustments and optimal resource use supports discovery projects in Grants Management and Customer Service, enabling our clients to fully leverage GenAI for transformative business improvements.

Azure OpenAI Onboarding

Our workshop provides an in-depth introduction to GenAI and Azure OpenAI for NIH use cases. Participants explore GenAI concepts, Azure OpenAI services, and practical applications through live demonstrations and hands-on exercises. This comprehensive session covers everything from setup to custom application development, guided by best practices and tailored adoption pathways for NIH integration.

Use Cases

Ticket Quality Analyzer: Elevating Service Desk Operations

In the realm of Service Desk operations, manual ticket quality assessments present significant challenges, leading to inefficiencies and potential service quality issues. LCG’s Ticket Quality Analyzer, powered by Azure OpenAI, automates the analysis of service desk ticket data, streamlining the process and ensuring adaptability to evolving service standards. This AI-powered solution proactively identifies potential issues, allowing for timely interventions and preventing service disruptions. By shifting the focus from labor-intensive manual tasks to strategic initiatives, the Ticket Quality Analyzer enhances overall service quality and operational efficiency, positioning the Service Desk for future scalability.

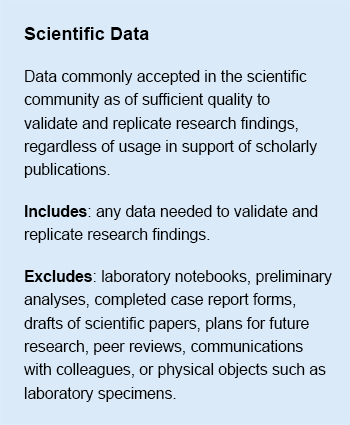

Interactive Knowledge Base: Streamlining Scientific Research and Support

In scientific research and lab support, manually sifting through extensive documentation to extract relevant information is a significant challenge, leading to delays in decision-making and support tasks. LCG’s Interactive Knowledge Base, powered by Azure OpenAI and using the Retrieval-Augmented Generation (RAG) technique, addresses this issue by providing a chatbot interface for quick, precise answers. The solution integrates with an agency-provided knowledge base on a secure FedRAMP High platform and adapts to evolving research needs. By making relevant information easier to reach, it reduces time spent on information retrieval, boosts productivity, and fosters collaboration among researchers and support staff, promoting a culture of knowledge-sharing and innovation.
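To make the RAG idea concrete, the following is a minimal, illustrative sketch and not LCG’s implementation. It assumes a tiny in-memory knowledge base and uses simple word-overlap (cosine) scoring as a stand-in for the embedding search a production system would likely use. The names `retrieve`, `build_prompt`, and `KNOWLEDGE_BASE`, and the sample passages, are hypothetical. The assembled prompt would then be sent to a language model, a step omitted here.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, passages, top_k=2):
    """Return up to top_k passages that share vocabulary with the question."""
    q = Counter(tokenize(question))
    scored = [(cosine(q, Counter(tokenize(p))), p) for p in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for score, p in scored[:top_k] if score > 0]


def build_prompt(question, passages, top_k=2):
    """Ground the question in retrieved passages before calling a model."""
    context = retrieve(question, passages, top_k)
    numbered = "\n".join(f"[{i}] {p}" for i, p in enumerate(context, start=1))
    return (
        "Answer using only the numbered context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{numbered}\n\nQuestion: {question}"
    )


# Hypothetical sample content standing in for an agency knowledge base.
KNOWLEDGE_BASE = [
    "The centrifuge must be balanced before every run.",
    "Freezer alarms are reported to the lab support desk.",
    "Badge access requests are approved by the facility manager.",
]

prompt = build_prompt("How do I report a freezer alarm?", KNOWLEDGE_BASE, top_k=1)
```

Restricting the model to retrieved passages is what keeps answers tied to the agency’s own documentation rather than to the model’s general training data.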

Compliance Screening of Grants Applications: Enhancing Efficiency and Accuracy

The manual screening of grant applications is a vital but labor-intensive process, consuming significant time and resources. LCG’s Compliance Screening of Grants Applications, powered by Azure OpenAI, addresses this challenge by automating the initial screening step. This innovative solution significantly reduces the time required for compliance checks, mitigating human error and bias. By streamlining the process, it introduces scalability and adaptability to evolving compliance standards. The AI-driven approach enhances workflow efficiency, improves accuracy, and promotes a more equitable and effective grant screening process, aligning with LCG’s strategic focus on resource optimization and risk mitigation.

Candidate Matching: Revolutionizing Recruitment Efficiency

The modern job market demands a seamless recruitment process, yet manual resume screening against job descriptions is time-consuming and error-prone, potentially overlooking highly qualified candidates. LCG’s Candidate Matching solution, powered by Azure OpenAI, automates the resume matching and scoring process, significantly enhancing recruitment efficiency. This AI-augmented prototype allows users to upload multiple resumes, matches them against job descriptions in a SharePoint repository, and provides detailed alignment scores. With configurable match thresholds, this solution offers flexibility in recruitment criteria. By automating these tasks, it enables recruitment teams to focus on strategic talent acquisition, ensuring fair and objective candidate evaluations, and ultimately fostering a more effective and agile recruitment process.
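The matching flow described above, scoring each resume against a job description and filtering by a configurable threshold, can be sketched as follows. This is a simplified illustration under stated assumptions: plain keyword overlap stands in for the model-based scoring, resumes and the job description are passed as strings instead of being read from SharePoint, and every name (`alignment_score`, `match_candidates`, `Match`, the sample data) is hypothetical.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Small illustrative stop-word list; a real system would need a fuller one.
STOP_WORDS = {"a", "and", "for", "in", "of", "the", "to", "with"}


def terms(text: str) -> set[str]:
    """Extract the set of lowercase, non-stop-word tokens from the text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {w for w in words if w not in STOP_WORDS}


@dataclass
class Match:
    candidate: str
    score: float


def alignment_score(resume: str, job_description: str) -> float:
    """Fraction of job-description terms that also appear in the resume."""
    required = terms(job_description)
    if not required:
        return 0.0
    return len(required & terms(resume)) / len(required)


def match_candidates(
    resumes: dict[str, str], job_description: str, threshold: float = 0.5
) -> list[Match]:
    """Score every resume and keep those at or above the threshold, best first."""
    matches = [
        Match(name, alignment_score(text, job_description))
        for name, text in resumes.items()
    ]
    kept = [m for m in matches if m.score >= threshold]
    return sorted(kept, key=lambda m: m.score, reverse=True)


# Hypothetical sample data.
JOB = "Python developer with Azure and SQL experience"
RESUMES = {
    "candidate_a": "Senior developer, eight years of Python, Azure, and SQL experience",
    "candidate_b": "Graphic designer with Photoshop experience",
}

shortlist = match_candidates(RESUMES, JOB, threshold=0.5)
```

Lowering `threshold` widens the shortlist and raising it narrows it, which is the kind of flexibility in recruitment criteria that a configurable match threshold is meant to provide.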

Chanaka Perera is Chief Technology Officer at LCG.